Leveraging Langchain for RAG: Build Intelligent PDF-Based AI Systems

1. Understanding the Limitations of Traditional Generative AI

2. The Power of Retrieval-Augmented Generation (RAG)

3. Real-World Examples

3.1. E-commerce Product Information

3.2. Legal Document Analysis

4. Implementing a RAG System with ChatGPT and FAISS

4.1. PDF Processing

4.2. Embedding Creation

4.3. Retrieval and Generation

4.4. Alternative Components

5. Conclusion

Understanding the Limitations of Traditional Generative AI

Traditional generative AI models are incredibly powerful tools capable of producing human-like text across various contexts. However, they often face challenges with factual accuracy and consistency, largely due to the rapidly changing nature of information in the real world.

For instance, a language model trained on a large dataset might confidently assert that a product is still available, even if it has been discontinued. This occurs because the model’s knowledge is based on a fixed snapshot of the world at a specific point in time. Once trained, the model does not have access to new data, which can lead to outdated or incorrect information.

The Power of Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) offers a promising solution to the limitations of traditional generative AI models. RAG enhances the generative process by combining the strengths of information retrieval systems with those of generative AI, resulting in more accurate, relevant, and informative outputs.

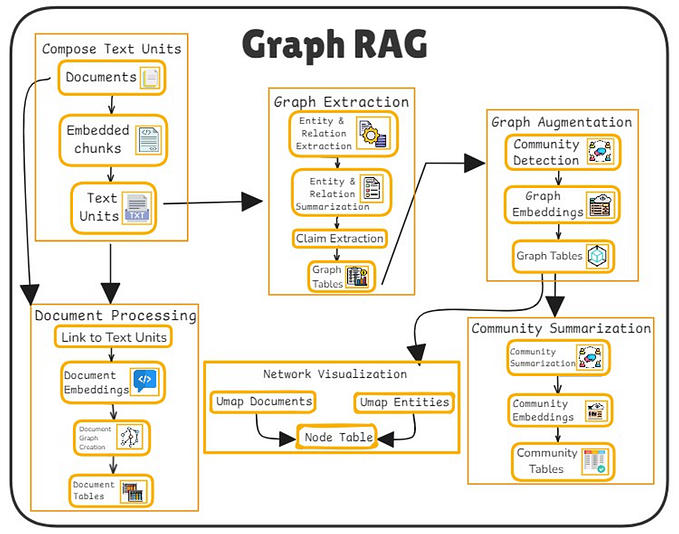

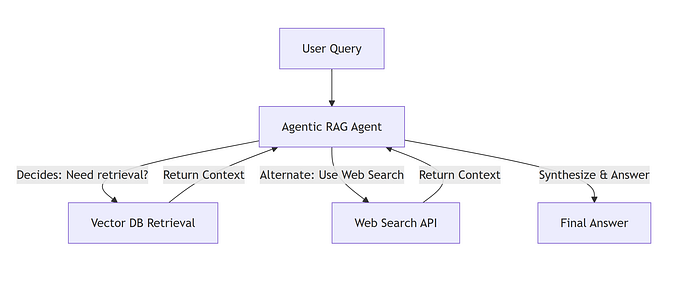

At its core, RAG involves two key steps:

- Retrieval: When a user presents a query, the system first searches through a relevant knowledge base — such as a collection of documents, articles, or databases — to identify the most pertinent information. This step ensures that the information used to generate the response is up-to-date and contextually relevant.

- Generation: The retrieved information is then combined with the original query and fed into a generative AI model. This model generates a response based on both the query and the retrieved context, ensuring that the answer is grounded in factual data.

By incorporating this retrieval step, RAG significantly enhances the capabilities of generative AI models. It helps ensure that the generated content is grounded in factual data, reducing the risk of hallucinations (where the model generates plausible but incorrect information) and inconsistencies.

Real-World Examples

E-commerce Product Information

Consider an e-commerce platform that uses a chatbot to answer customer queries about products. A traditional generative AI chatbot might provide incorrect product details if the product information has been updated since the model’s training. However, a RAG-powered chatbot can access a real-time product database to retrieve the latest information before generating a response. This ensures that customers receive accurate and up-to-date product details.

By bridging the gap between static knowledge and dynamic information, RAG offers a promising approach to enhancing the capabilities of generative AI models across various applications.

Legal Document Analysis

Imagine a legal professional who needs to quickly find information in a lengthy legal contract. A RAG-powered system using ChatGPT and FAISS could:

- Extract key clauses and provisions from the contract.

- Create embeddings for each clause to facilitate efficient search.

- Allow the user to ask questions about the contract, such as “What is the termination clause?”

- Retrieve relevant clauses and generate a concise summary or answer based on the query.

Implementing a RAG System with ChatGPT and FAISS

To build a RAG system, we can use ChatGPT for the language model and FAISS for the embedding database. Here’s an overview of the process:

1. PDF Processing

- Extraction: Extract text from PDF documents while preserving structure and formatting.

- Chunking: Divide the text into manageable chunks for embedding (Adjust parameter values as per your pdf document).

from langchain_community.document_loaders import PyPDFLoader

from langchain.text_splitter import CharacterTextSplitter# Load PDF data

exercise_loader = PyPDFLoader("Exercise.pdf")

exercise_data = exercise_loader.load_and_split()# Split text into chunks

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

exercise_documents = text_splitter.split_documents(exercise_data)

2. Embedding Creation

- Vectorization: Convert text chunks into numerical embeddings using a model.

- Indexing: Store embeddings in FAISS for efficient retrieval.

from langchain.embeddings import OpenAIEmbeddings

from langchain_community.vectorstores import FAISS# Create embeddings

embeddings = OpenAIEmbeddings(api_key="API_KEY")

exercise_pdfsearch = FAISS.from_documents(exercise_documents, embeddings)3. Retrieval and Generation

- RetrievalQA Chain: Used for direct question-answering tasks where the system retrieves relevant documents and generates a response based on those documents. Ideal for straightforward QA scenarios requiring precise answers.

- ConversationalRetrievalChain: Designed for interactive and context-aware dialogues. Maintains conversation history and returns source documents along with generated responses. Suitable for ongoing interactions and contextual understanding.

from langchain.chains import RetrievalQA, ConversationalRetrievalChain

from langchain.chat_models import ChatOpenAI# Initialize ChatGPT

llm = ChatOpenAI(temperature=0.0, openai_api_key="API_KEY", model="gpt-3.5-turbo")# Create QA chain

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

retriever=exercise_pdfsearch.as_retriever(search_kwargs={"score_threshold": 0.7})

)# Create conversational chain

con_r_chain = ConversationalRetrievalChain.from_llm(

llm=ChatOpenAI(temperature=0, openai_api_key="API_KEY"),

retriever=exercise_pdfsearch.as_retriever(search_kwargs={"score_threshold": 0.7}),

return_source_documents=True

)# Query example

chat_history = []

query = "list down fruits we can eat"

result = con_r_chain({"question": query, "chat_history": chat_history})

chat_history.append((query, result["answer"]))

print(result['answer'])

4. Alternative Components

Text Splitters:

- CharacterTextSplitter: Splits text into chunks of a fixed size. Simple and effective for many use cases.

- RecursiveCharacterTextSplitter: Uses recursive rules to split text based on structure, useful for more organized documents.

- LanguageModelTextSplitter: Utilizes language models to determine chunk boundaries, suitable for diverse text types.

Embedding Databases:

- FAISS: Efficient for large-scale similarity search and retrieval.

- Chroma: Optimized for fast search and retrieval, suitable for real-time applications.

- DocArrayInMemorySearch: Stores embeddings in memory, ideal for small-scale applications where persistence is less critical.

Conclusion

RAG represents a significant advancement in the field of AI, enabling systems to generate content that is not only coherent and contextually appropriate but also accurate and verifiable. By combining retrieval and generation, RAG systems can transform the way we interact with AI, making it a valuable tool for applications ranging from customer service to complex document analysis.

By integrating ChatGPT with FAISS, developers can build robust RAG systems that leverage both real-time data retrieval and advanced generative capabilities. This hybrid approach is particularly valuable in domains where factual correctness and source attribution are critical.

Read my all blogs at : https://shyampatel1320.medium.com/

Feel free to share your thoughts or connect with me on LinkedIn to continue the conversation.